The Rise of AI Agents: What They Are and Why They Matter

AI agents are software systems that can plan, decide, and act autonomously to achieve defined goals. This article explains what AI agents are, how they work, where they are being used today, and the practical limitations organizations must understand.

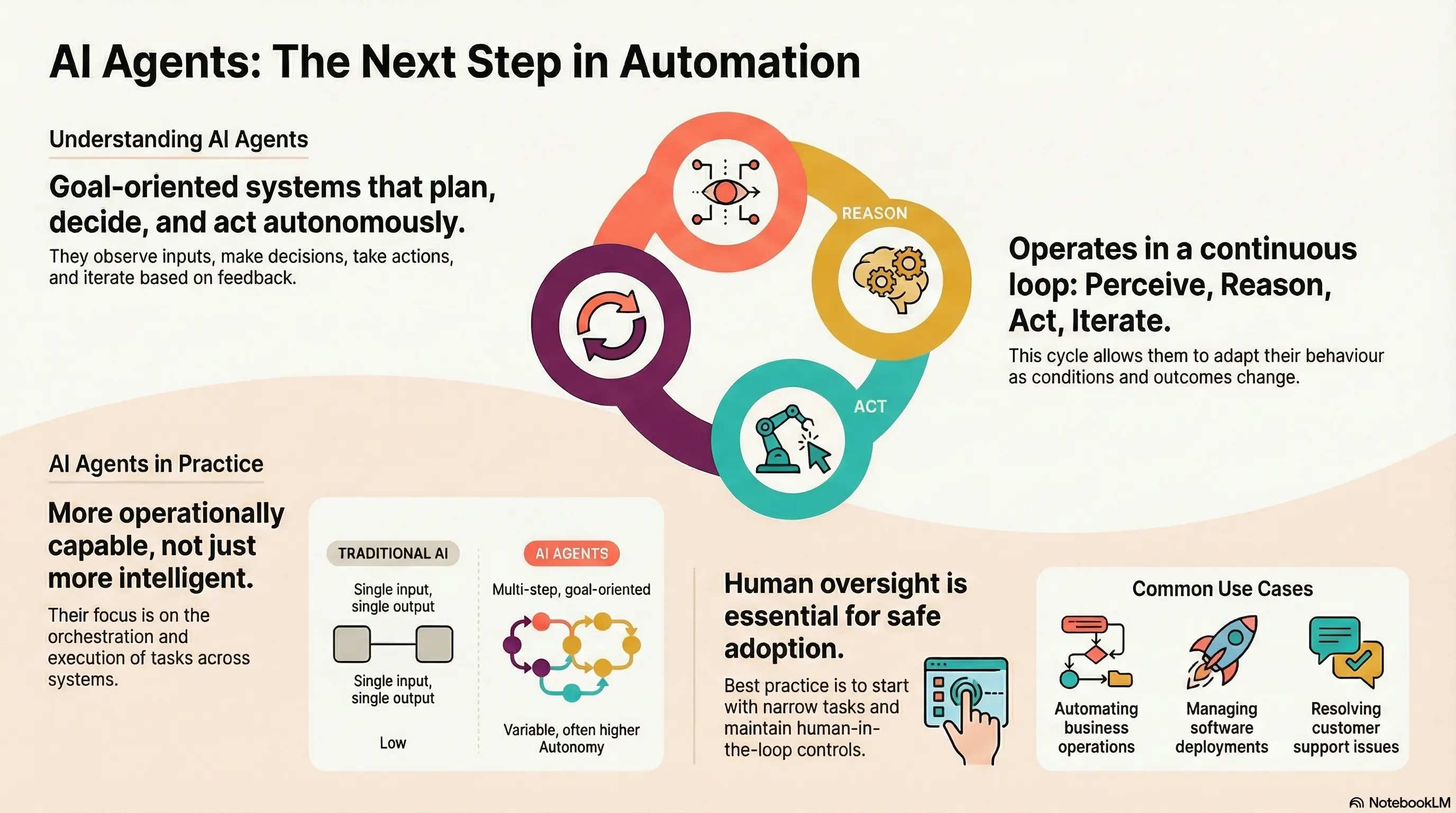

AI agents represent a significant shift in how artificial intelligence systems are designed and deployed. Rather than responding to single prompts or executing isolated tasks, AI agents are built to pursue goals, make decisions over time, and interact with tools, systems, and environments with increasing autonomy. For the agent-centered view of AI, see Russell & Norvig, 2021, Artificial Intelligence: A Modern Approach. For an introduction to systems of interacting agents, see Wooldridge, 2009, An Introduction to MultiAgent Systems.

Understanding what AI agents are—and what they are not—is essential for realistic adoption and responsible use.

What Are AI Agents?

An AI agent is a software system that can:

- Observe inputs from its environment

- Make decisions based on goals and context

- Take actions using available tools or interfaces

- Iterate based on outcomes and feedback

Unlike traditional AI models that produce a single output per request, agents operate across multiple steps, often maintaining state and adapting their behavior as conditions change.

AI agents may be fully automated, semi-autonomous, or human-supervised, depending on the design and risk profile.

How AI Agents Work

While implementations vary, most AI agents combine several core components.

Perception and Input Handling

Agents receive information from sources such as:

- User instructions

- Databases and APIs

- Documents or knowledge bases

- System events or sensor data

This input provides context for decision-making.
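As a minimal sketch, assuming inputs arrive as plain Python values tagged with their source, the snippet below collects observations from different sources into one context record that later reasoning steps can query. The `Observation` and `Context` classes and the source labels are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Observation:
    """One input the agent has received (hypothetical structure)."""
    source: str  # e.g. "user", "api", "document", "event"
    content: Any


@dataclass
class Context:
    """Accumulates observations so later steps can reason over them."""
    observations: list[Observation] = field(default_factory=list)

    def add(self, source: str, content: Any) -> None:
        self.observations.append(Observation(source, content))

    def from_source(self, source: str) -> list[Any]:
        # Contents of every observation from one source, in arrival order.
        return [o.content for o in self.observations if o.source == source]


ctx = Context()
ctx.add("user", "Summarize open support tickets")
ctx.add("api", {"open_tickets": 3})
ctx.add("event", "ticket #2 closed")
print(ctx.from_source("api"))
```

Keeping the source on each observation lets the agent weigh a user instruction differently from, say, an automated system event.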

Reasoning and Planning

Agents typically use a reasoning layer to determine what actions to take next. This may involve:

- Breaking a goal into sub-tasks

- Selecting tools or actions

- Evaluating constraints and priorities

Modern agents often use large language models for this reasoning and planning, because these models can interpret complex instructions and produce coherent multi-step plans. For how language models can pick up new tasks from a few examples in the prompt, see Brown et al., 2020, Language Models are Few-Shot Learners. For a discussion of the opportunities and risks of such foundation models, see Bommasani et al., 2021, On the Opportunities and Risks of Foundation Models.
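To show the shape of a plan without depending on any model, the sketch below replaces the language model with a fixed lookup table. That substitution is an assumption made so the example runs on its own. A goal is broken into ordered sub-tasks, each paired with a tool, and a constraint check separates steps the agent may run from steps whose tool it is not permitted to use. The goal name, step descriptions, and tool names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    description: str
    tool: str  # name of the tool this sub-task should use


# Hypothetical goal-to-plan table standing in for an LLM planner.
PLAYBOOK: dict[str, list[Step]] = {
    "weekly report": [
        Step("fetch sales figures", "query_database"),
        Step("summarize figures", "summarize"),
        Step("email report to team", "send_email"),
    ],
}


def plan(goal: str, allowed_tools: set[str]) -> tuple[list[Step], list[Step]]:
    """Break a goal into sub-tasks and split them by whether their tool is allowed."""
    steps = PLAYBOOK.get(goal, [])
    runnable = [s for s in steps if s.tool in allowed_tools]
    blocked = [s for s in steps if s.tool not in allowed_tools]
    return runnable, blocked


runnable, blocked = plan("weekly report", allowed_tools={"query_database", "summarize"})
print([s.tool for s in runnable])  # ['query_database', 'summarize']
print([s.tool for s in blocked])   # ['send_email']
```

Returning blocked steps instead of silently dropping them gives the surrounding system a chance to ask a human before proceeding.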

Action Execution

Agents can perform actions such as:

- Calling APIs

- Writing or modifying files

- Sending messages

- Triggering workflows

- Querying systems

These actions allow agents to affect external systems rather than merely generate text.
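One common pattern for letting an agent act is to expose each action as a named function in a registry and dispatch by name. The minimal sketch below assumes hypothetical tools that only describe what they would do; in a real deployment they would call APIs, write files, or send messages. The `tool` decorator, the `execute` helper, and both tool names are illustrative, not part of any particular framework.

```python
from typing import Callable

ToolFn = Callable[..., str]
TOOLS: dict[str, ToolFn] = {}


def tool(name: str) -> Callable[[ToolFn], ToolFn]:
    """Decorator that registers a function under a tool name."""
    def register(fn: ToolFn) -> ToolFn:
        TOOLS[name] = fn
        return fn
    return register


@tool("send_message")
def send_message(to: str, text: str) -> str:
    # Hypothetical stand-in: a real tool would call a messaging API here.
    return f"message to {to}: {text}"


@tool("write_file")
def write_file(path: str, content: str) -> str:
    # Hypothetical stand-in: reports the write instead of touching the filesystem.
    return f"would write {len(content)} characters to {path}"


def execute(name: str, **kwargs: str) -> str:
    """Run the named tool, failing loudly if the agent requests an unknown one."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**kwargs)


print(execute("send_message", to="ops", text="deploy finished"))
```

Failing on unknown tool names, rather than guessing, keeps a model's mistaken or invented tool request from turning into an unintended action.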

Feedback and Iteration

After acting, agents evaluate results and decide whether to continue, adjust, or stop. This loop distinguishes agents from single-step AI interactions.
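The act, evaluate, and decide cycle can be sketched as a bounded loop. Everything task-specific here is hypothetical: the agent requests pages of records, a stand-in check rejects responses above an assumed 250 KB limit, and the agent adjusts by halving the page size. The point is the control flow: act, check the result, then stop on success, adjust and retry on failure, and give up once a hard step limit is reached so a failing agent cannot loop forever.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    page_size: int
    history: list[str] = field(default_factory=list)  # context kept across steps


def act(state: AgentState) -> int:
    # Hypothetical action: request a page of records; assume each record is 10 KB.
    return state.page_size * 10


def evaluate(response_kb: int, limit_kb: int = 250) -> str:
    # Hypothetical check: the downstream system rejects responses above the limit.
    return "ok" if response_kb <= limit_kb else "too_large"


def run(state: AgentState, max_steps: int = 5) -> str:
    for step in range(1, max_steps + 1):
        verdict = evaluate(act(state))
        state.history.append(f"step {step}: page_size={state.page_size} -> {verdict}")
        if verdict == "ok":
            return "done"        # goal met: stop
        state.page_size //= 2    # adjust the approach and try again
    return "step limit reached"  # hard cap prevents endless loops


state = AgentState(page_size=100)
print(run(state))
print(*state.history, sep="\n")
```

The `history` list is the state the comparison table refers to: each iteration can see what was already tried.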

AI Agents vs Traditional AI Systems

The following table compares AI agents with traditional AI systems:

| Characteristic | Traditional AI | AI Agents |
|---|---|---|
| Interaction model | Single input, single output | Multi-step, goal-oriented |
| State management | Often stateless | Maintains context over time |
| Tool usage | Limited or manual | Integrated and autonomous |
| Autonomy | Low | Variable, often higher |
| Typical use | Prediction or generation | Orchestration and execution |

AI agents are not inherently more intelligent than traditional systems, but they are more operationally capable: they can carry out work across systems rather than only produce an output. For a broader treatment of agents, see Russell & Norvig, 2021, Artificial Intelligence: A Modern Approach.

Common Types of AI Agents

- Task-Oriented Agents: Designed to complete specific objectives such as scheduling, reporting, or data processing. These agents often operate within well-defined boundaries.

- Workflow and Automation Agents: Used to coordinate multi-step business processes across systems, such as onboarding, compliance checks, or content pipelines.

- Conversational Agents with Agency: More advanced chat-based systems that can take actions, retrieve information, and perform tasks on behalf of users rather than only responding to questions.

- Multi-Agent Systems: Architectures where multiple agents interact, collaborate, or compete, each with distinct roles. These systems are an active area of research and experimentation.

Why AI Agents Are Gaining Momentum

Several factors have accelerated interest in AI agents:

- Improved language and reasoning models

- Broader access to APIs and automation platforms

- Demand for end-to-end task execution, not just insights

- Maturation of cloud infrastructure and orchestration tools

Together, these factors make it feasible to deploy agents that perform meaningful work within controlled environments.

Practical Use Cases

AI agents are being explored and deployed in areas such as:

- Business operations: Automating routine processes across tools

- Software development: Managing tickets, tests, and deployments

- Customer support: Resolving issues through multi-step workflows

- Data operations: Collecting, cleaning, and summarizing information

- Personal productivity: Managing calendars, emails, and research tasks

In most production settings, agents operate under strict guardrails and human oversight.

Limitations and Risks

Despite their promise, AI agents introduce new challenges:

- Reliability and Error Propagation: Because agents act across multiple steps, early mistakes can compound if not detected and corrected.

- Control and Oversight: Greater autonomy increases the importance of monitoring, logging, and intervention mechanisms.

- Security and Access Management: Agents often require system access. Poorly designed permissions can introduce security risks.

- Evaluation Difficulty: Assessing agent performance is more complex than evaluating single-model outputs, as outcomes depend on sequences of actions.
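A simple calculation shows why compounding matters. Assume, purely for illustration, that each step of an n-step task succeeds independently with the same probability p. The task then succeeds end to end only if every step does:

$$
P(\text{task succeeds}) = p^{n}, \qquad p = 0.95,\ n = 10 \;\Rightarrow\; 0.95^{10} \approx 0.60
$$

Real steps are rarely independent, and agents can sometimes detect and correct an error mid-run, so this is a rough intuition rather than a model of any specific system. It does show why per-step checks and early error detection carry so much weight in multi-step workflows.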

Best Practices for Adoption

Organizations adopting AI agents commonly follow several principles:

- Start with narrow, well-defined tasks

- Enforce explicit boundaries and permissions

- Maintain human-in-the-loop controls for critical actions

- Log decisions and actions for auditability

- Continuously test and refine behavior

These practices help align agent capabilities with operational reality.
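Several of these practices (explicit permissions, human approval for critical actions, and logging) can be combined in a thin wrapper around every tool call. The sketch below is illustrative only: the tool names, the `ALLOWED` and `HIGH_IMPACT` sets, and the `reviewer` function standing in for a human approver are all hypothetical, and a production system would persist the audit log rather than keep it in memory.

```python
import json
import time
from typing import Callable

ALLOWED = {"read_ticket", "draft_reply", "issue_refund"}  # explicit permissions
HIGH_IMPACT = {"issue_refund"}                            # require human sign-off
AUDIT_LOG: list[str] = []


def guarded_call(tool: str, args: dict, approve: Callable[[str, dict], bool]) -> str:
    """Check permissions, ask for approval on high-impact actions, and log every decision."""
    if tool not in ALLOWED:
        decision = "denied: not permitted"
    elif tool in HIGH_IMPACT and not approve(tool, args):
        decision = "denied: human rejected"
    else:
        decision = "executed"  # a real system would invoke the tool here
    AUDIT_LOG.append(json.dumps(
        {"ts": time.time(), "tool": tool, "args": args, "decision": decision}
    ))
    return decision


def reviewer(tool: str, args: dict) -> bool:
    # Hypothetical stand-in for a human reviewer: approve refunds up to 50 only.
    return args.get("amount", 0) <= 50


print(guarded_call("read_ticket", {"id": 7}, reviewer))         # executed
print(guarded_call("issue_refund", {"amount": 500}, reviewer))  # denied: human rejected
print(guarded_call("delete_account", {"id": 7}, reviewer))      # denied: not permitted
print(len(AUDIT_LOG))                                           # 3
```

Denied calls are logged too, since attempted actions are often as informative for an audit as completed ones.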

The Role of Humans

AI agents do not replace accountability. Humans remain responsible for:

- Defining goals and constraints

- Approving high-impact actions

- Interpreting outcomes

- Managing ethical, legal, and compliance considerations

Effective systems treat agents as collaborators, not independent actors.

Key Takeaways

AI agents are goal-oriented systems capable of multi-step decision-making and action. They differ from traditional AI by maintaining context and executing workflows. Most agents rely on a combination of models, rules, tools, and feedback loops. Real-world value depends on careful design, governance, and oversight. The rise of AI agents reflects a shift toward operational AI, not autonomous intelligence.